Monthnotes May 2025

Every month or so, I share a quick digest of what I've been working on and reading. Here's the latest. More in the series here.

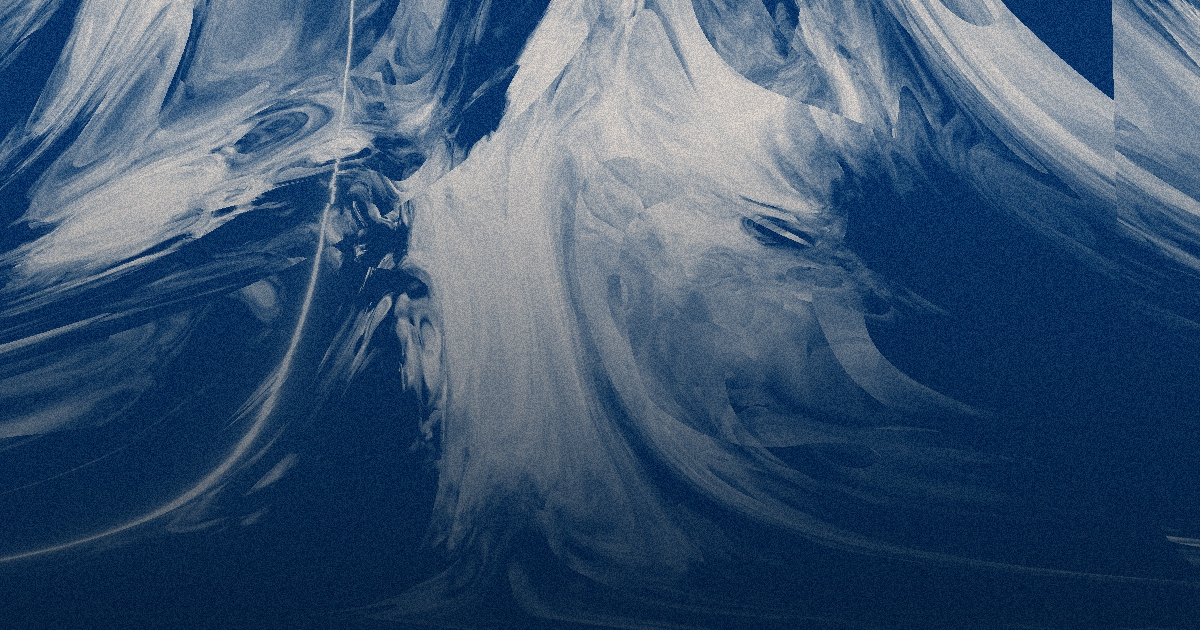

Last month I promised I'd talk a bit more about the web-poetry-with-cycles project that I've been working on, which now has a name: Siktdjup.

Siktdjup has a few layered meanings, but primarily it refers to how deep you can see into a body of water. This obviously depends on the water's cloudiness, which in English is called "turbidity", but there isn't really a direct everyday translation for "siktdjup". The closest technical term is probably "Secchi depth", named after the disc used to measure it, but nobody says that in conversation. We're calling the project "Siktdjup" in English too, pronounced "sikt-yoop".

You can check it out at siktdjup.xyz. Click, tap, or use the space bar to move through the lines of the poem. You'll likely get trapped in a cycle at some point - there are ways to escape these cycles, but you'll need to figure that out yourself.

The poem was written by Anna Arvidsdotter. The shaders in the background were made by Simon David Ryden. I built the interface, mostly using p5.js. You can choose to read in Swedish or English on the screen that pops up when the page first loads.

We'll be showing it as part of the Present Tense exhibition during Southern Sweden Design Days in May. We'll also be doing an audiovisual performance of the piece, where my code contributions will be replaced with musical contributions. That'll be on the afternoon of 24 May at STPLN in Malmö. I'll try and get some sort of recording, because our practices so far are sounding great. More next time.

Also in the music sphere, I've been working with a couple of friends on a band we're calling "Tom Runs Alone" (name inspo). We'll be playing our first show on 7 June, in the same tiny basement where I premiered my Carrington Event post-rock sonification piece last year. I'll likely have more to say about this later in the year.

I've been doing some advising work on a data-driven theatre piece by Zsófi Rebeka Kozma, about being an immigrant worker in the bars around Möllevångstorget - Malmö's going-out district, and a vital part of the history of the country's labour movement.

"Data-driven theatre" might sound a bit weird, but Zsófi does a great job of breaking down her experiences through big and small datasets. In one part, she dumps a load of receipts onto the ground and sweeps them into different-sized piles to show how much of the bar's nightly take goes to staff, to expenses, to tax, etc. In another part, she gets the audience to stand up and sit down depending on whether they've experienced the different things she describes. In another, she runs around the stage picking up and stacking cups, smiling inanely at the audience in the process, to illustrate how many bars she had to visit before she could even get a job in the first place, and how many more her friend from a Middle Eastern background had to visit.

It's fantastic and very affecting. If you're in the vicinity of Malmö, then go get yourself a (free) ticket from the Teaterhögskolan website for the performances in the next couple of days.

Shouting at clouds: why is everyone suddenly calling a podcast a "pod"? I hate it. Is it because no-one owns iPods any more? See also: blog/blogpost.

I mentioned last month that I've been participating in a workshop called "Forbidden Music" at Malmö University. The goal was to produce musical artefacts linked to the phenomenon of banned or repressed songs, instruments and genres.

In the process, we learnt quite a lot about how instruments work on a materials level - how vibrations travel in the direction of wood grain, for example. Or how resonating cavities work. We also learnt a lot about various examples of banned and repressed musics throughout history.

The artefact I wanted to build is something I called the X&Ylophone. It's a marimba-like system where a performer chooses a dataset and then cuts bars of wood like a bar chart, where the bigger the number the longer the bar (and the deeper the note played when the bar is struck). Those bars are then laid out on the instrument and can be played. The performer can play them in sequence, out of order, or emphasise some notes more than others to tell the story they want to tell.
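To make the data-to-bar mapping a bit more concrete, here's a minimal sketch in Python. It's an illustration, not anything we actually built in the workshop: the helper names and the 40 cm maximum bar length are hypothetical, and the pitch estimate assumes idealised uniform bars of the same wood, width and thickness, whose fundamental frequency falls with the square of their length.

```python
import math


def bar_lengths(values, max_length_cm=40.0):
    """Cut-list for a hypothetical X&Ylophone: one bar per data value.

    Each bar's length is proportional to its value, scaled so the largest
    value gets a bar of max_length_cm (an assumed, arbitrary maximum).
    """
    if not values or any(v <= 0 for v in values):
        raise ValueError("need at least one value, and all values must be positive")
    largest = max(values)
    return [max_length_cm * v / largest for v in values]


def relative_pitch_semitones(length, reference_length):
    """Semitones a bar sounds above (+) or below (-) a reference bar.

    Assumes idealised uniform bars whose fundamental frequency scales
    with 1 / length**2. Real marimba bars are carved out underneath to
    tune them, which this ignores.
    """
    frequency_ratio = (reference_length / length) ** 2
    return 12 * math.log2(frequency_ratio)


if __name__ == "__main__":
    data = [10, 20, 40]  # made-up example values, not a real dataset
    lengths = bar_lengths(data)
    longest = max(lengths)
    for value, length in zip(data, lengths):
        shift = relative_pitch_semitones(length, longest)
        print(f"value {value}: bar {length:.1f} cm, {shift:+.1f} semitones vs longest bar")
```

With the values 10, 20 and 40, the bars come out at 10, 20 and 40 cm, and under the square-law assumption the 20 cm bar sounds 24 semitones (two octaves) above the 40 cm one. That steep curve means a dataset with a wide range would quickly produce bars that are impractically long or short, so a real build would probably need some compression of the scale.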

Unfortunately I was unwell for one of the workshops where most of the building was due to happen, and so I didn't get to create a physical version. But it's fairly well scoped out, so perhaps later this year I could make it happen in reality?

Not much progress to report on project "make a small physical device that plays ambient sonifications of local API data. Weather data, air quality, ISS position, earthquakes, etc". Partly because I hit a bit of a roadblock in terms of reading data on the Raspberry Pi with Pure Data (thinking of shifting to RNBO instead, and staying in Max-land), and partly because I've been playing Elden Ring 🗡.

My friend Timour has just finished a really nice project translating the experience of hearing loss into something that you can see.

As anyone who has ever talked on the phone to someone in a windy place knows, microphones and wind gusts don't mix well. That's a big problem when you wear hearing aids, and are therefore beholden to microphones.

Timour's project visualises the disruption that wind causes to his hearing aids, distorting and glitching out a video stream. "When the wind roars, that’s what it feels like to me. My senses are overwhelmed, my brain flinches," he writes.

Read his writeup and see the video over here.

Okay, that's probably enough for today. Happy Eurovision for tomorrow. I'm really enjoying that Sweden has essentially sent a Finnish entry this year.

I'll be back in your inboxes in mid-June. Until then!

- Duncan